Git is a distributed version control and collaboration system developed by Linus Torvalds in 2005. Torvalds explains the name (git) with a bit of sarcasm: "I'm an egotistical bastard, and I name all my projects after myself. First 'Linux', now 'git'."

Today Git is the most popular version control tool. It is free and consists of a set of command-line utilities. It lets teams track the history of software development and collaborate on one project from anywhere in the world. Each change is recorded as a commit, which makes it possible to track changes, undo them if necessary, and return to previous versions.

Besides being convenient and flexible and preserving the development history, Git greatly reduces development errors and data loss. Similar version control systems include Mercurial, Subversion, Darcs, and Bazaar. However, Git has some advantages:

- high speed and transparency;

- ease of use in non-linear development;

- efficiency for projects of any scale, including large systems with 1,000 participants;

- storing snapshots of the repository, which keeps operations fast;

- the ability to restore the code from a local copy in case the server with the remote repository fails;

- the ability to quickly download code from a remote server if the local copy is damaged.

Git's main tasks:

- saving code and its change history;

- saving information about the user who makes changes to the code;

- recovery of any code version;

- merging different versions and changes;

- preparing code for release.

Git is typically used together with repository hosting services.

GitHub was created in 2008 and acquired by Microsoft for $7.5 billion in 2018. GitHub is a source code hosting site and a large social network for developers, with 20 million users and 80 million repositories worldwide. Users can view each other's code, contribute to it, and leave comments. They can also create their own repositories and publish their work. The free plan covers public repositories and, since 2019, private ones as well. The platform is written in Ruby on Rails and hosts a large number of open-source projects available to the public.

GitHub benefits:

- free service;

- fast search across repositories;

- a large number of users, which makes it easy to find help quickly when solving a problem;

- availability of practical tools for collaboration and integration with Git;

- easy integration with other services;

- the ability to work with TFS, HG, and SVN.

GitLab is an alternative to GitHub: a web-based hosting service that provides free public and private repositories. GitLab was developed by two Ukrainian developers, Dmitry Zaporozhets and Valery Sizov, using Ruby and some parts of Go. The architecture was later enhanced with Go, Vue.js, and Ruby on Rails.

GitLab is a complete DevOps platform for project planning, source code management, monitoring, security, and more. It also offers wiki hosting and a bug tracking system. Using GitLab significantly shortens the product development cycle and increases productivity, which in turn adds value for the customer.

GitLab benefits:

- ease of setup;

- user-friendly interface and tools;

- unlimited number of free private repositories;

- the ability to integrate a large number of APIs and third-party services;

- reliable, failure-free operation;

- the possibility of self-hosting;

- open-source license.

Bitbucket is a GitHub counterpart designed for hosting projects and developing them collaboratively. The service was developed by the Australian company Atlassian, which also created Jira and Confluence. It offers free public and private Git repositories, integrates with Jira and Snyk, and has built-in CI/CD capabilities. The service is a great solution for small teams.

Bitbucket benefits:

- unlimited number of public and private repositories;

- support for public and private Git repositories;

- integration with other Atlassian solutions (Jira, Trello);

- the ability to integrate with third-party services (Twitter, Google Analytics);

- a service already used by more than a million teams and 10 million developers around the world.

Jenkins is an open-source automation server written in Java. It automates parts of the software development process without human involvement and supports continuous integration. Jenkins is widely used in companies that need automated application deployment. The system is free, runs on Windows, macOS, and Unix-like operating systems, and integrates with Docker and Kubernetes. Jenkins does not host source code itself but connects to existing repositories via webhooks. This makes it a universal CI/CD tool regardless of which remote repository hosting is chosen.

Jenkins benefits:

- free use;

- broad integration options and a large plugin library;

- large and active community;

- load distribution across multiple machines;

- a good fit for large projects.

QOps can work effectively with all the above services.

GitHub and GitLab both support installing runners, which execute commands from a .yml file stored in the source code repository when a certain event occurs. Usually that event is a push of source code or a merge of branches in the remote repository. The .yml syntax differs slightly between the two, although in both cases it describes the same thing: the runner's behavior and the process of building, testing, and then deploying the application. Both hosting systems allow runners to be installed in a Windows environment, which is not yet available for Bitbucket hosting.

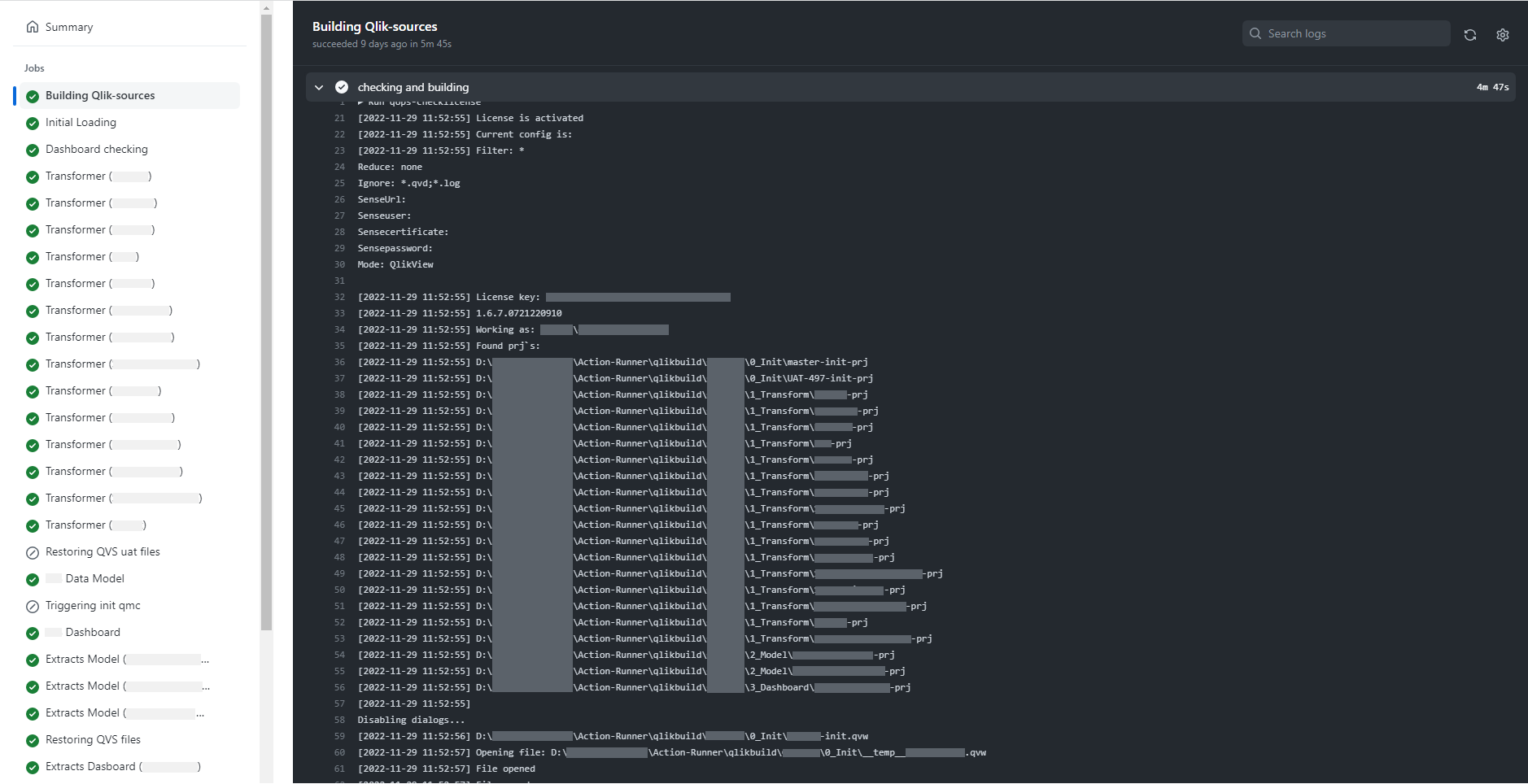

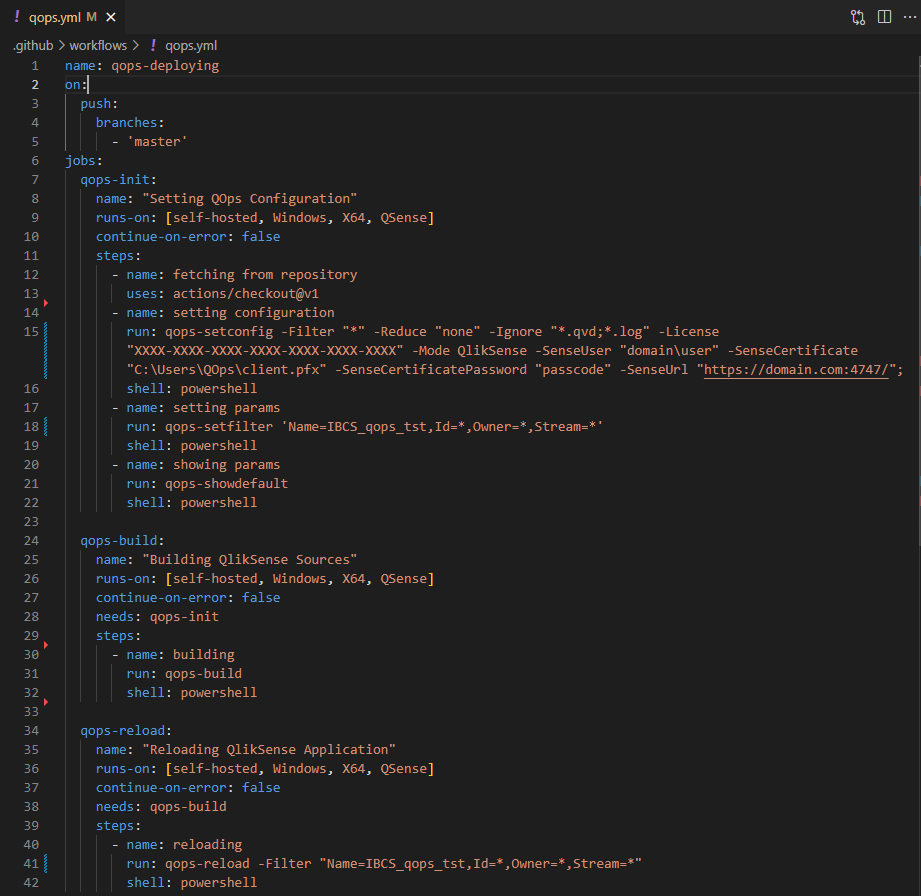

The following shows how QOps is used with these systems. For GitHub, the .yml file defines a structured sequence of steps. The example below describes three steps: init, build, and reload.
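For readers who prefer text to screenshots, a GitHub workflow with this init, build, reload shape might look roughly like the sketch below. It is an illustration only: the file name, workflow name, job name, and branch are assumptions, and the `echo` commands are placeholders standing in for the actual QOps calls, which are not reproduced here. A self-hosted Windows runner is assumed because QOps runs on Windows.

```yaml
# .github/workflows/qops.yml (hypothetical file name)
name: qops-pipeline            # assumed workflow name

on:
  push:
    branches: [main]           # assumed branch; a push triggers the run

jobs:
  deploy:                      # assumed job name
    # QOps runs on Windows, so a self-hosted Windows runner is assumed
    runs-on: [self-hosted, windows]
    steps:
      - name: Check out the repository
        uses: actions/checkout@v4

      # The echo commands are placeholders, not real QOps commands
      - name: Init
        run: echo "init step goes here"

      - name: Build
        run: echo "build step goes here"

      - name: Reload
        run: echo "reload step goes here"
```

Steps inside one job run in order on the same runner, and a failing step stops the ones after it, which matches the idea of successive steps.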

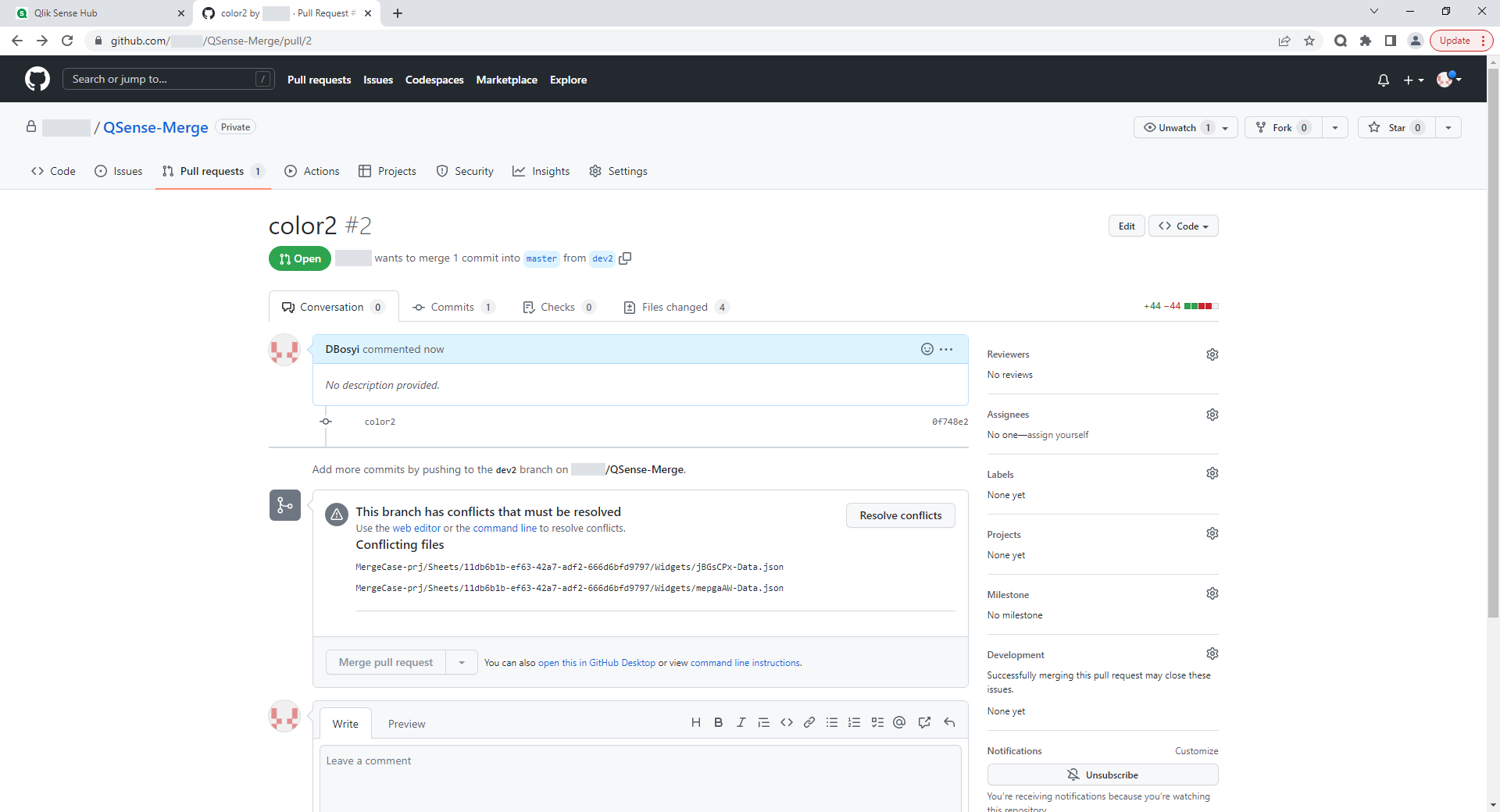

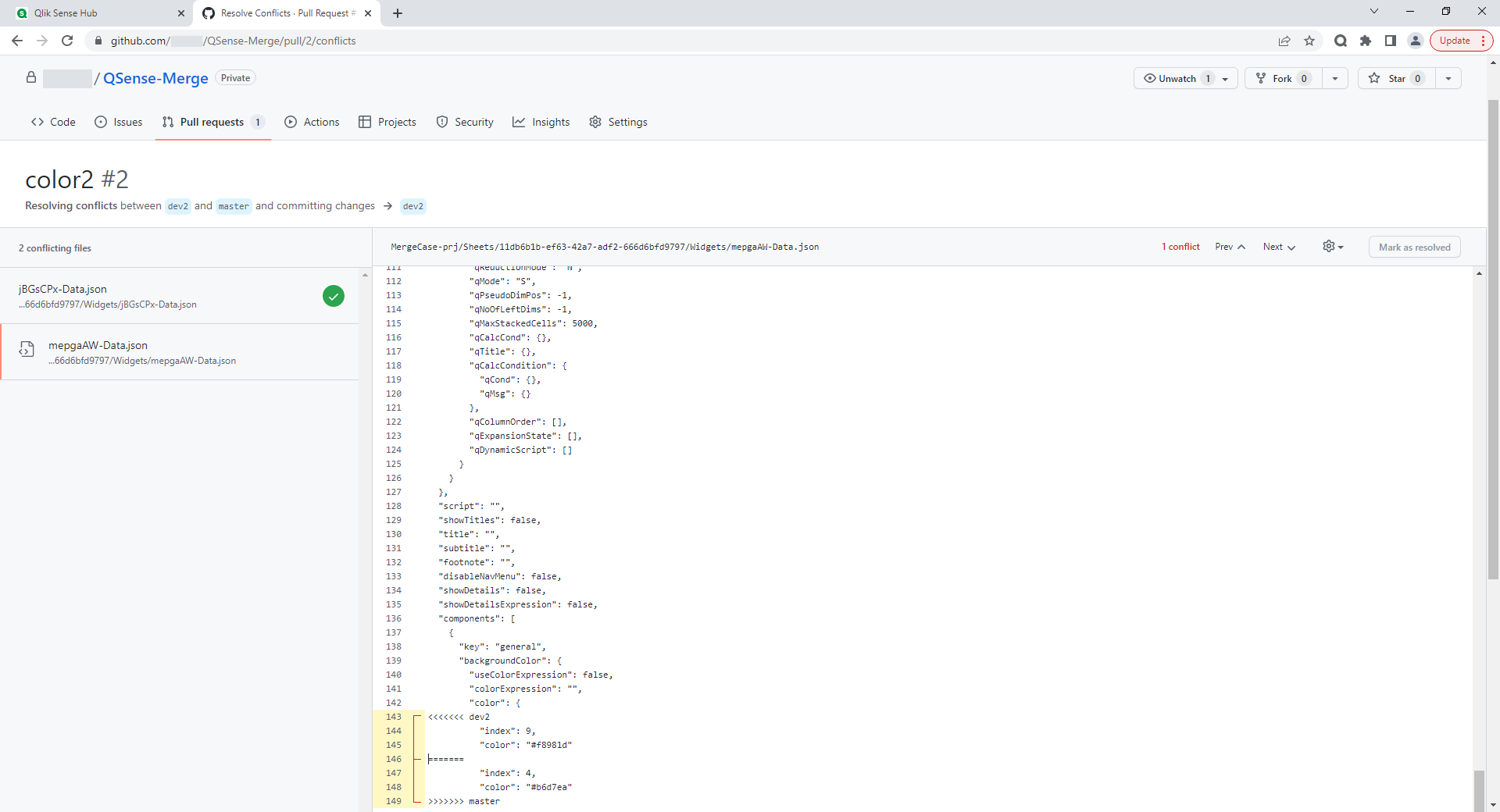

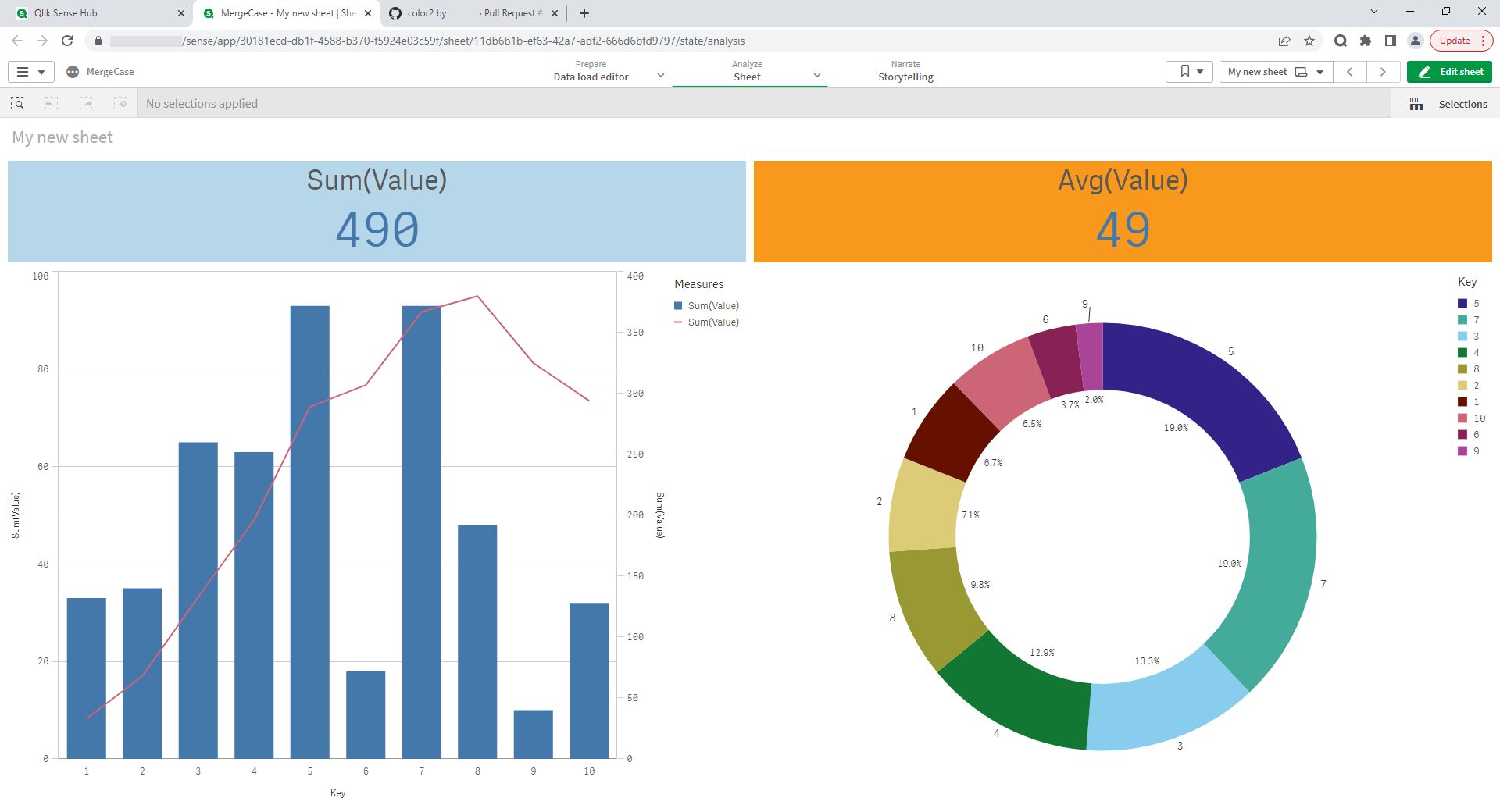

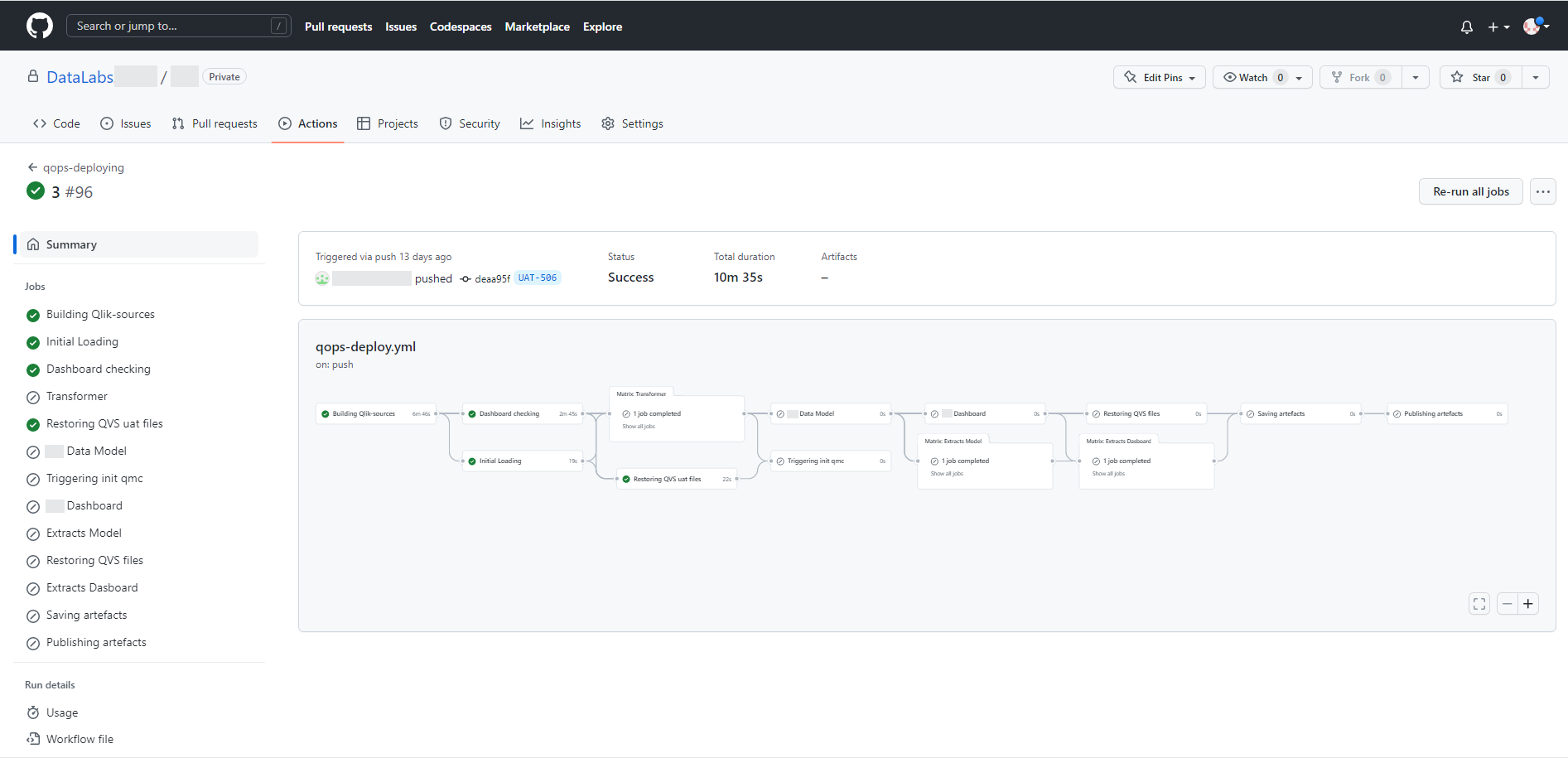

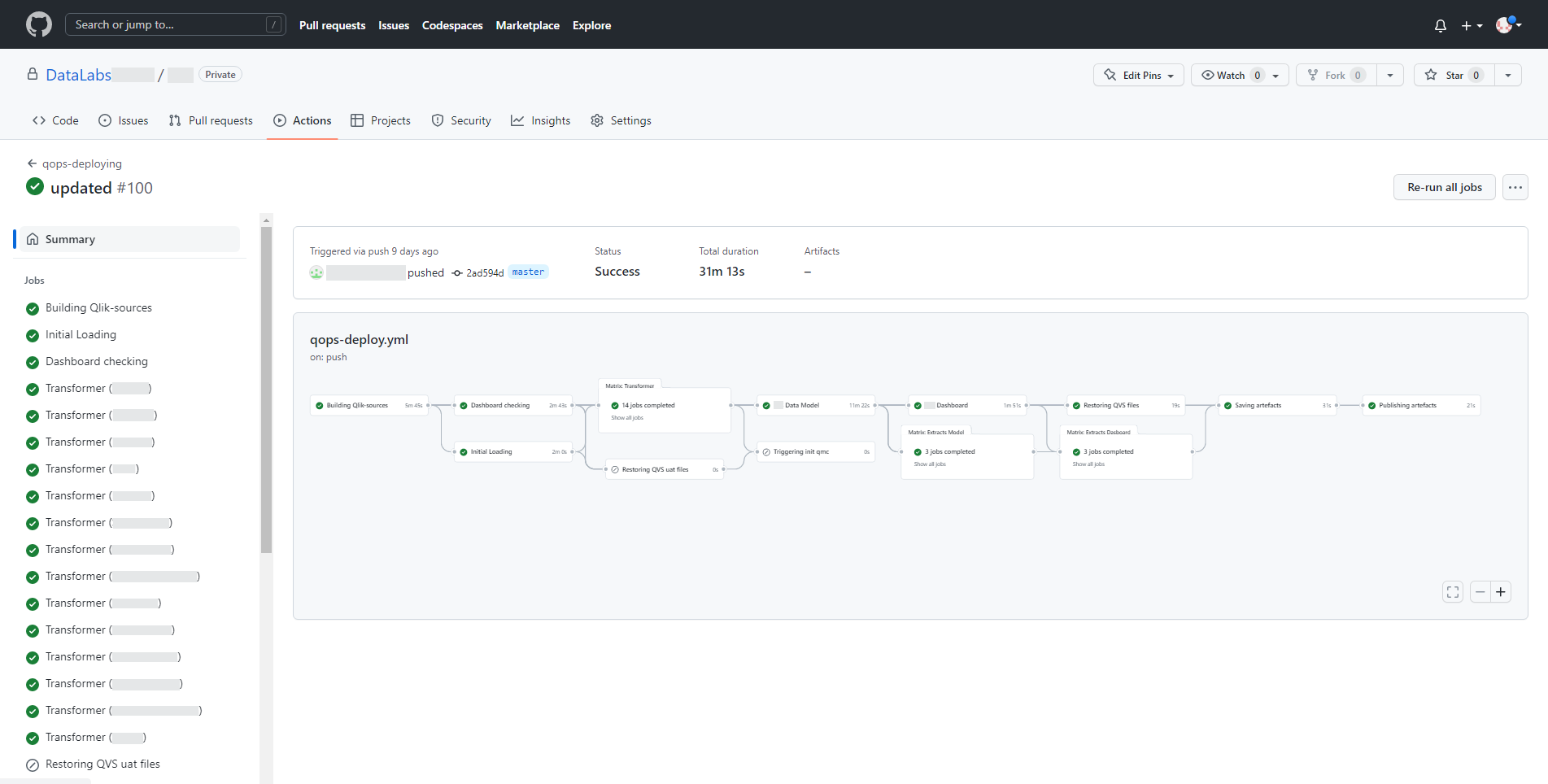

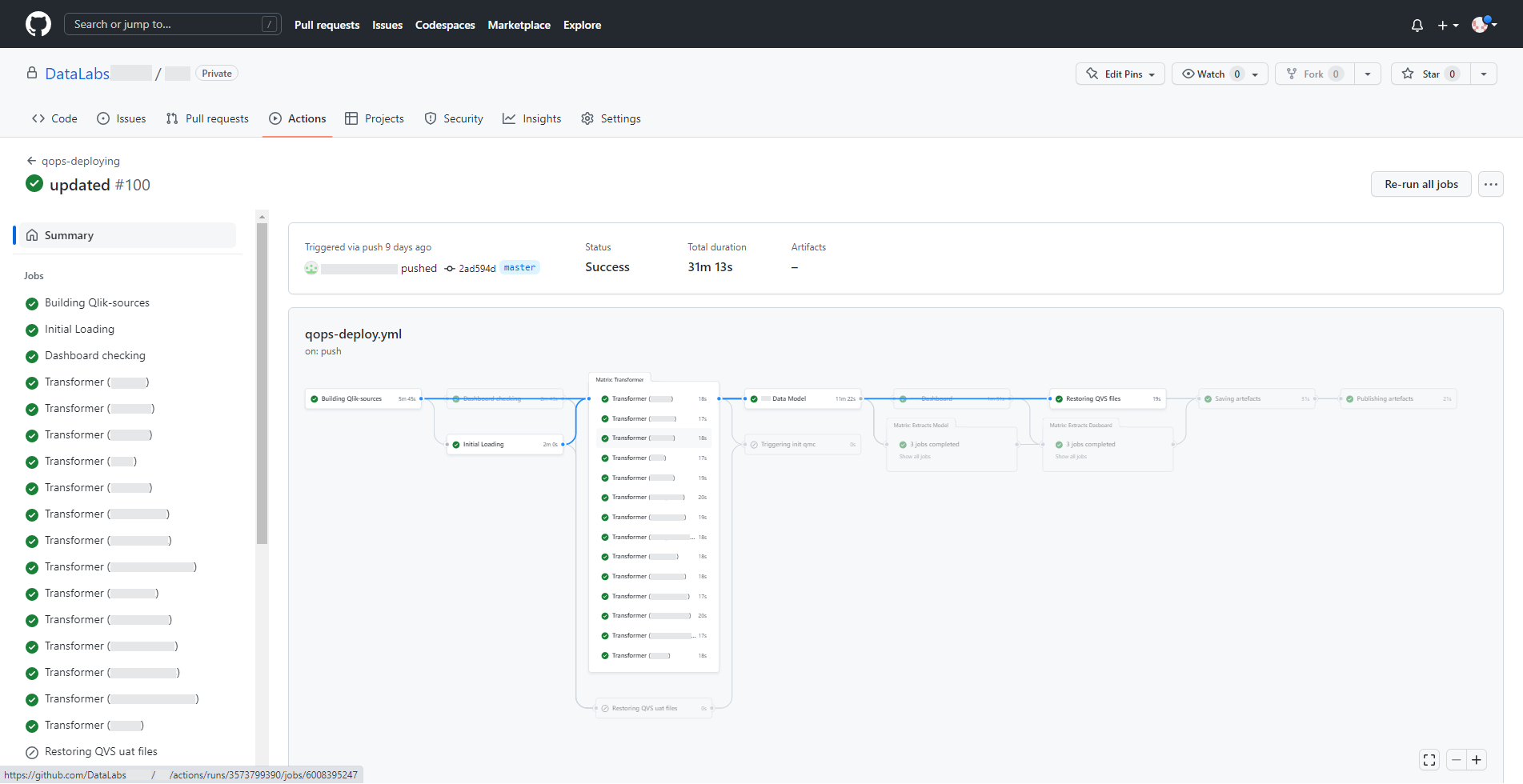

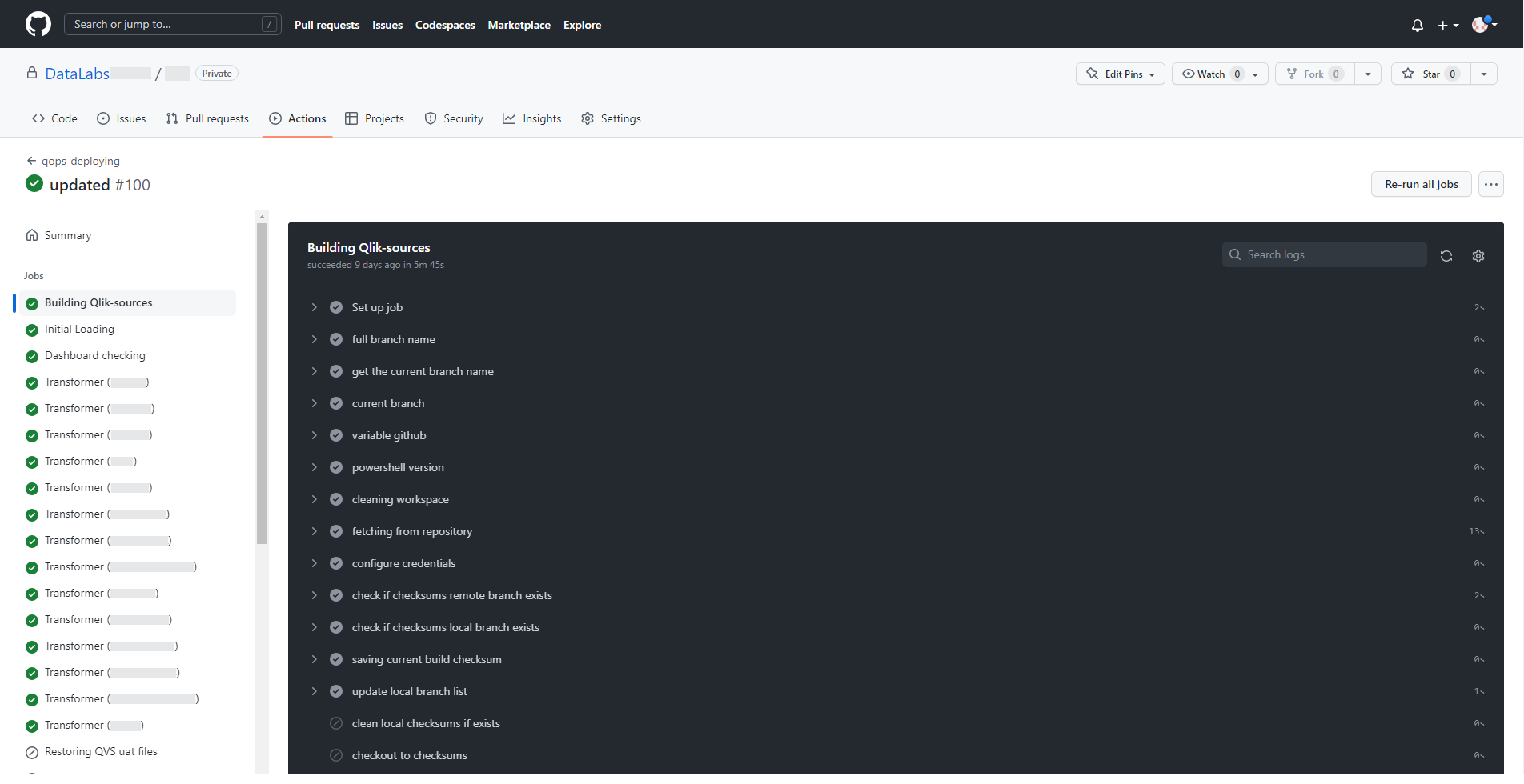

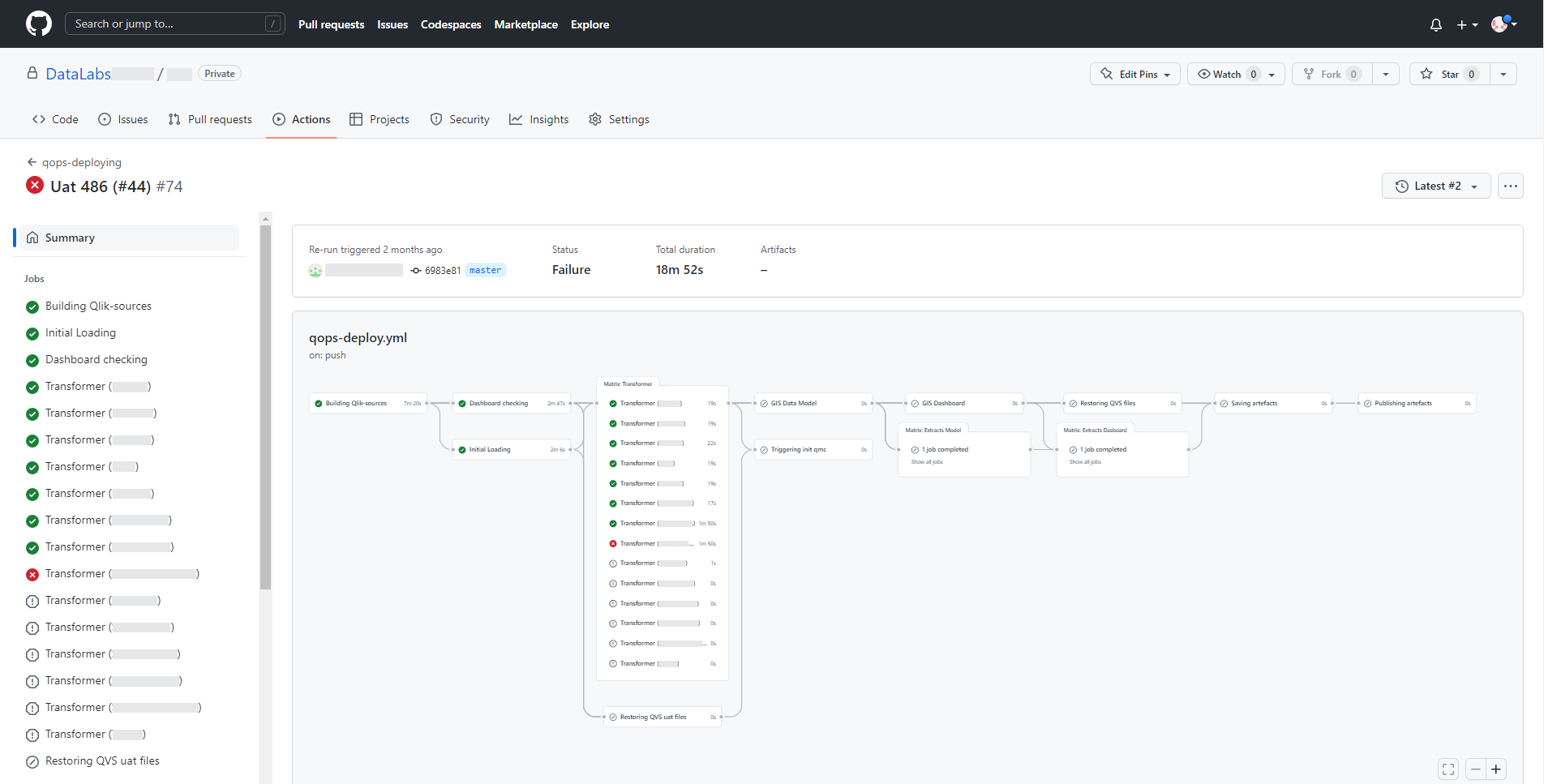

A successfully executed pipeline looks like this.

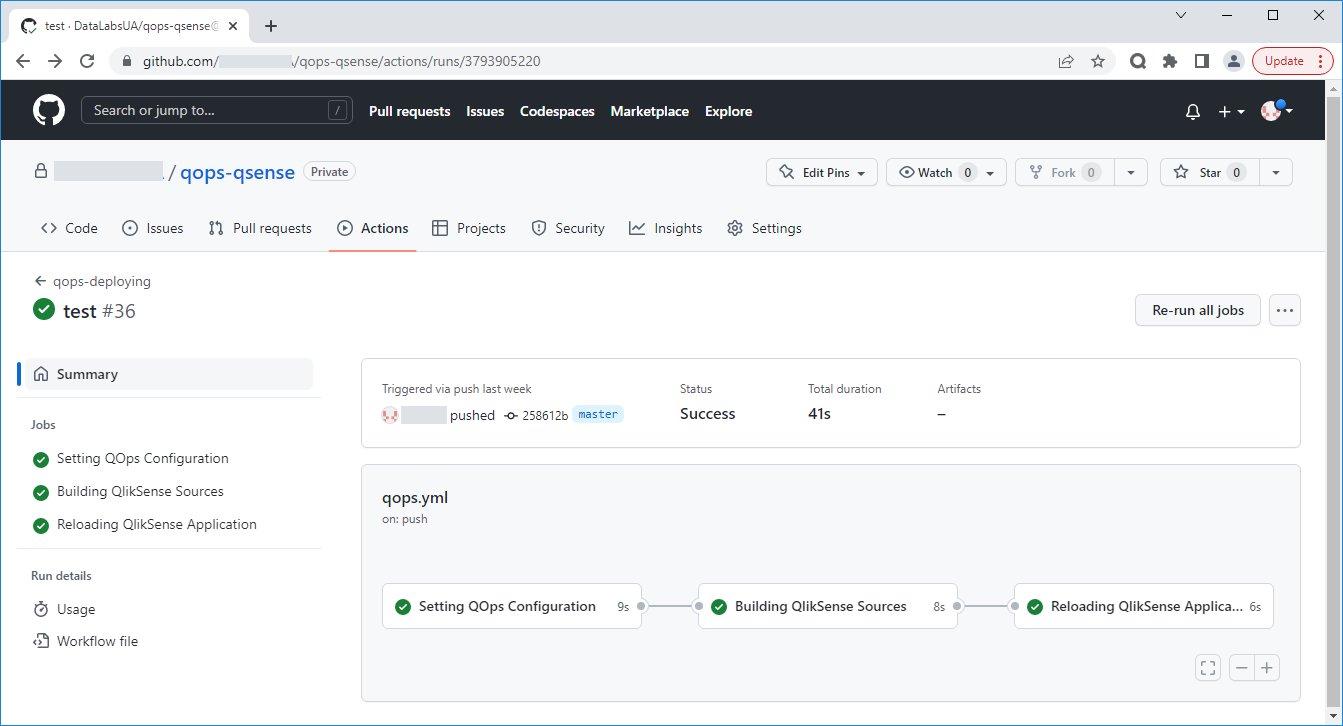

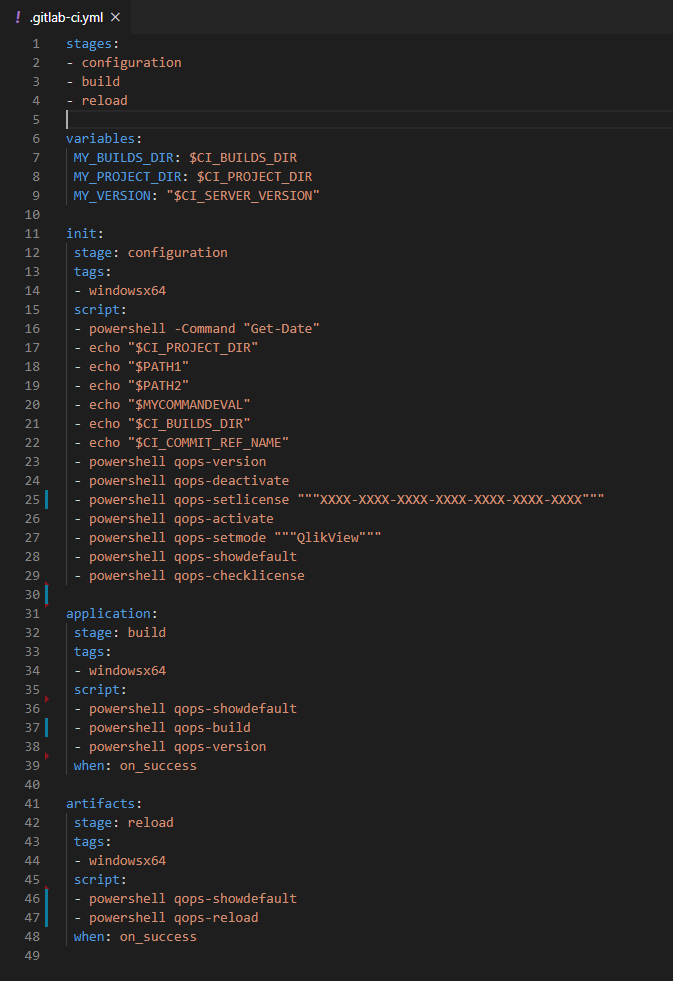

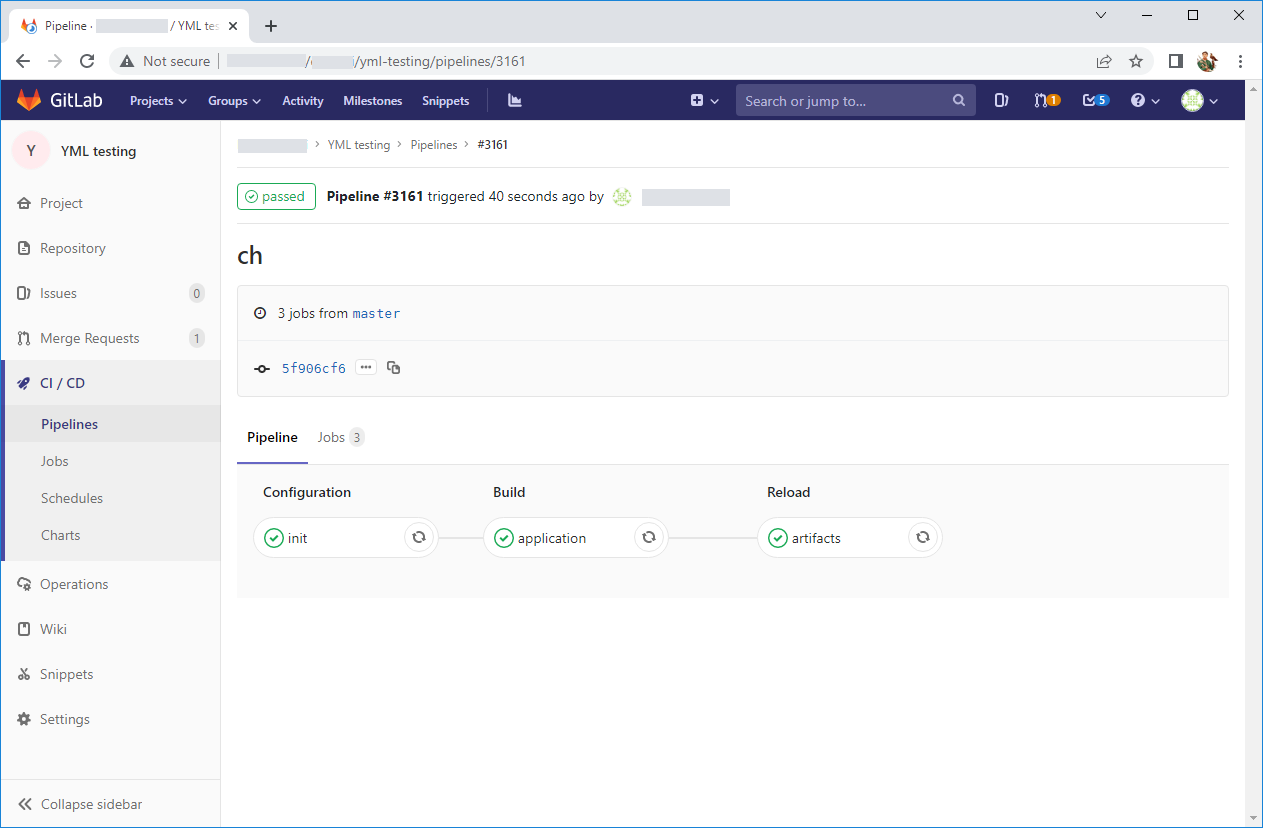

For GitLab, the .yml file is the same kind of structured sequence of steps. The differences are the keyword names and more flexible handling of variables. Unfortunately, the two platforms do not share a unified .yml format, so the files are not interchangeable.
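As a rough illustration of those keyword differences, a GitLab pipeline with the same three stages could be sketched as follows. The job names, the runner tag, and the `TARGET_ENV` variable are hypothetical, and the `echo` commands are again placeholders rather than real QOps commands. The `when: manual` setting on the last job is one way to require a user's confirmation in the GitLab interface before that job runs.

```yaml
# .gitlab-ci.yml (sketch; job names, tag and variable are assumptions)
stages:
  - init
  - build
  - reload

variables:
  TARGET_ENV: "dev"            # hypothetical variable shared by all jobs

default:
  tags:
    - windows                  # assumed tag of a Windows runner

init:
  stage: init
  script:
    # Placeholder command; PowerShell reads CI variables via $env:NAME
    - 'echo "init for $env:TARGET_ENV goes here"'

build:
  stage: build
  script:
    - 'echo "build step goes here"'

reload:
  stage: reload
  when: manual                 # waits for a user to start the job in the UI
  script:
    - 'echo "reload step goes here"'
```

Where a GitHub workflow uses `jobs`, `steps`, `run`, and `runs-on`, GitLab uses `stages`, per-job `script` lists, and `tags` to select a runner, so a file written for one platform has to be rewritten for the other.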

The view of a successfully completed pipeline, consisting of the same stages, is shown below. It is worth noting that GitLab offers more interactivity. For example, a pipeline can be set up to require manual confirmation from a user.

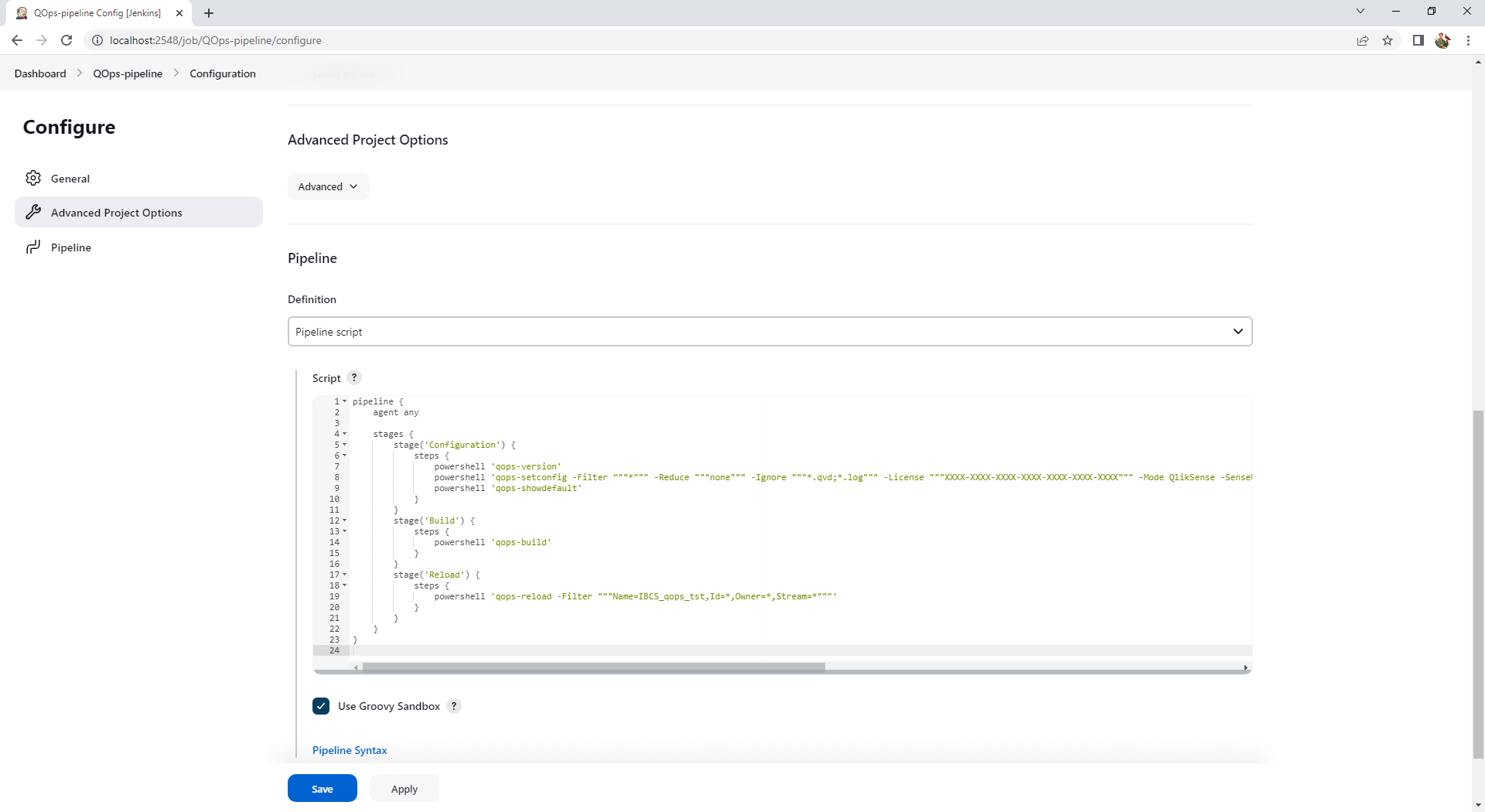

QOps currently works exclusively in the Windows environment. If Bitbucket is chosen for source code hosting, the automated pipeline can be built with the Windows version of Jenkins. Its versatility and variety of plugins make it possible to link any remote repository through webhooks. When configured through the Jenkins interface, the pipeline structure is written as a nested, JSON-like object. In our example it contains the same three stages: Configuration, Build, and Reload.

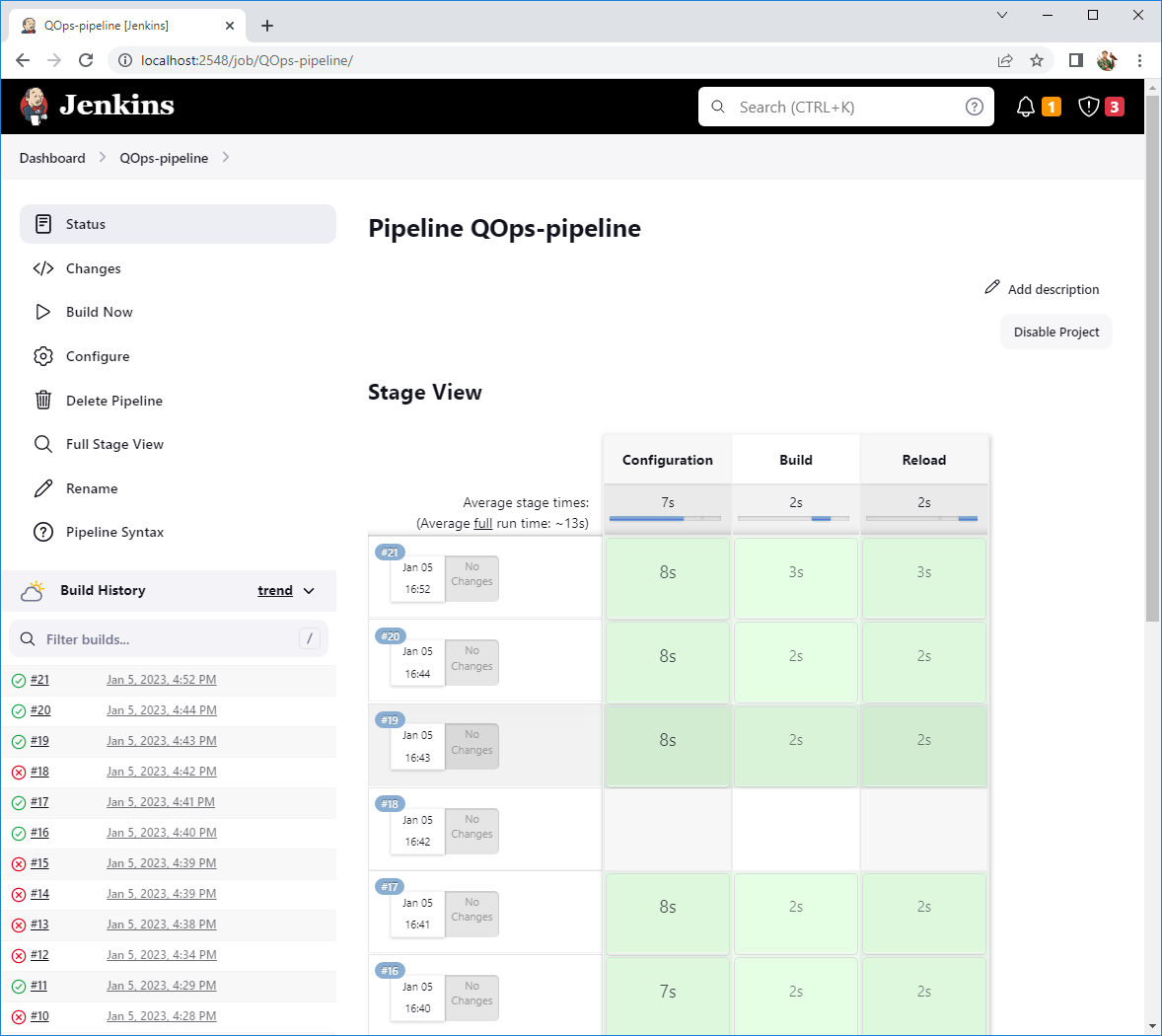

The result of a successful pipeline run in Jenkins is shown below. The interface also shows how the current run compares with previous ones.

You can find more information at the link.