In September 2022, we introduced our own product, QOps. The DataLabs team supports each client throughout the installation and use of the product and offers 24/7 support. We regularly receive feedback on QOps usage, which lets us look at our own product through the user’s eyes. Below are answers to the most common questions:

- Are triggers and additional properties saved for Qlik apps?

Yes. Keep in mind, however, that some properties require a minimal data model to be kept in the application. For this purpose, QlikView provides a «reduce» mode.

For Qlik Sense apps, the additional «always one selected» field property is also retained. This requires saving selections as well, which departs slightly from the QOps concept of storing only the source code and properties.

- Is it possible to merge the visual part in Qlik Sense?

Yes. With QOps, several developers can work on the same sheet, each on their own part of the work. This requires a sound branching strategy to avoid merge conflicts. If conflicts do occur, resolve them carefully so that the result does not disrupt interaction with the Qlik API.

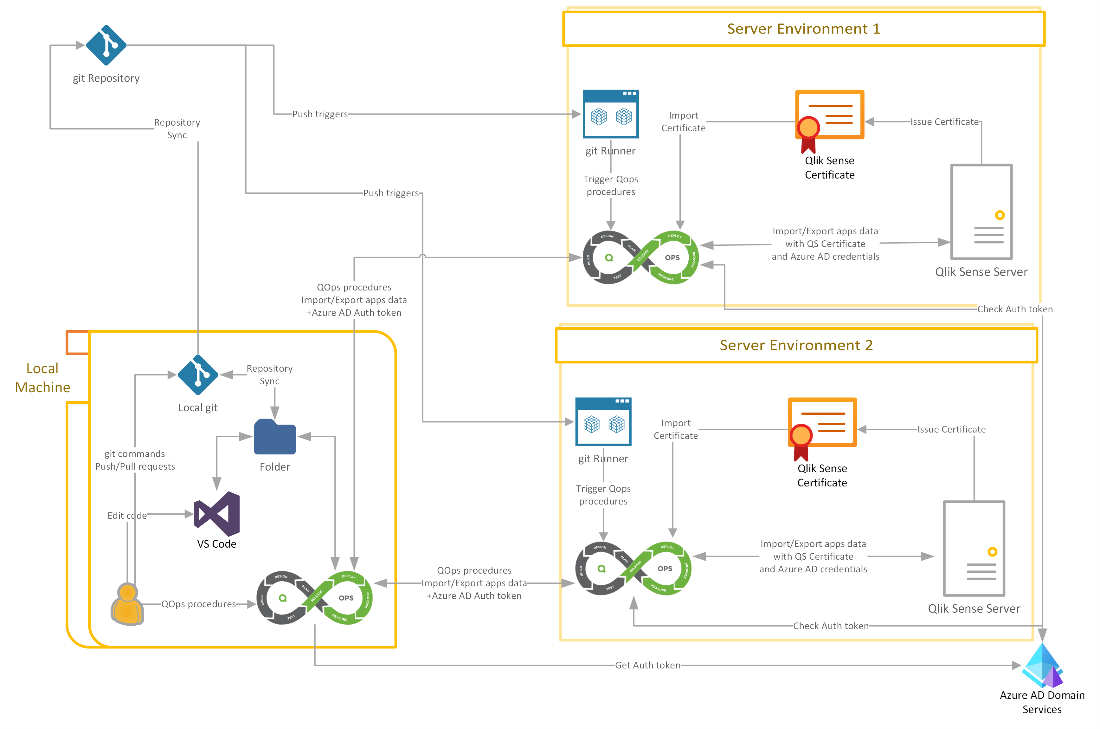

- Is it possible to work with Bitbucket and Jenkins?

Yes, it is possible. The source code of a Qlik application can be hosted in a Bitbucket repository, and a CI/CD process can be built on top of Jenkins with the help of webhooks.
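To illustrate the webhook part, here is a minimal Python sketch of the glue between a Bitbucket push event and a Jenkins job. It is an assumption-based example, not part of QOps: the function names, the job name `qlik-app`, the `BRANCH` job parameter, and the example host are all hypothetical. It relies only on the shape of a Bitbucket Cloud push payload and on Jenkins' remote build trigger URL. The QOps commands the Jenkins job would run are not shown here, because they depend on your setup and on the product documentation.

```python
from urllib.parse import quote, urlencode


def pushed_branches(payload: dict) -> list[str]:
    """Return the names of branches updated by a Bitbucket Cloud push event."""
    branches = []
    for change in payload.get("push", {}).get("changes", []):
        new = change.get("new")  # None when the branch was deleted
        if new and new.get("type") == "branch":
            branches.append(new["name"])
    return branches


def jenkins_trigger_url(base_url: str, job: str, token: str, branch: str) -> str:
    """Build a Jenkins remote-trigger URL for a parameterized job.

    Assumes the job has "Trigger builds remotely" enabled with `token`
    and defines a string parameter named BRANCH (hypothetical name).
    """
    query = urlencode({"token": token, "BRANCH": branch})
    return f"{base_url.rstrip('/')}/job/{quote(job)}/buildWithParameters?{query}"


if __name__ == "__main__":
    # Trimmed-down example of a push payload; real payloads carry more fields.
    event = {"push": {"changes": [{"new": {"type": "branch", "name": "feature/sheet-1"}}]}}
    for name in pushed_branches(event):
        print(jenkins_trigger_url("https://jenkins.example.com", "qlik-app", "TOKEN", name))
```

In practice, Bitbucket can also call Jenkins directly through a webhook or plugin; the sketch only makes the data flow explicit.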

- How does QOps differ from comparable tools, and what are the advantages of using it?

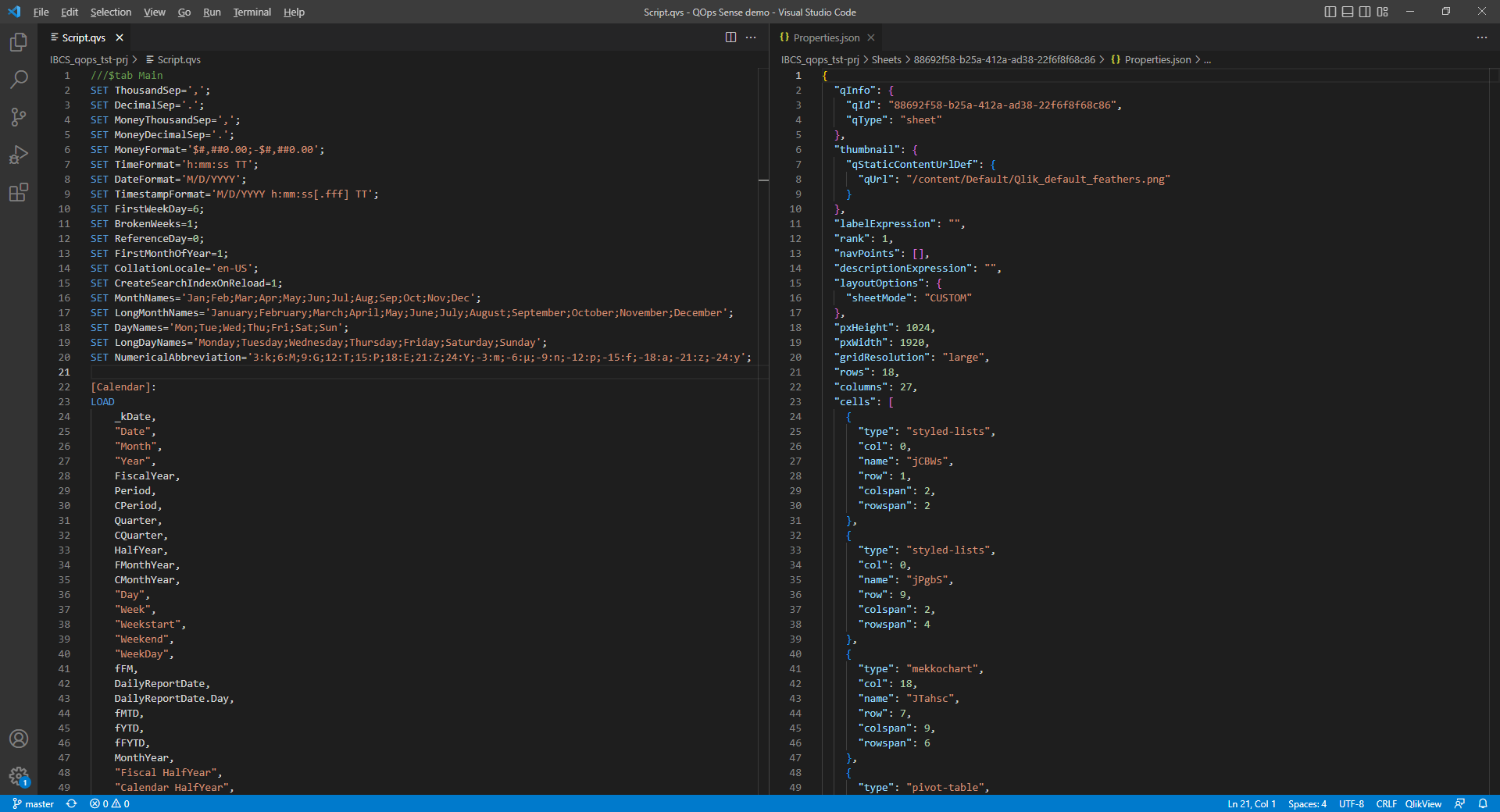

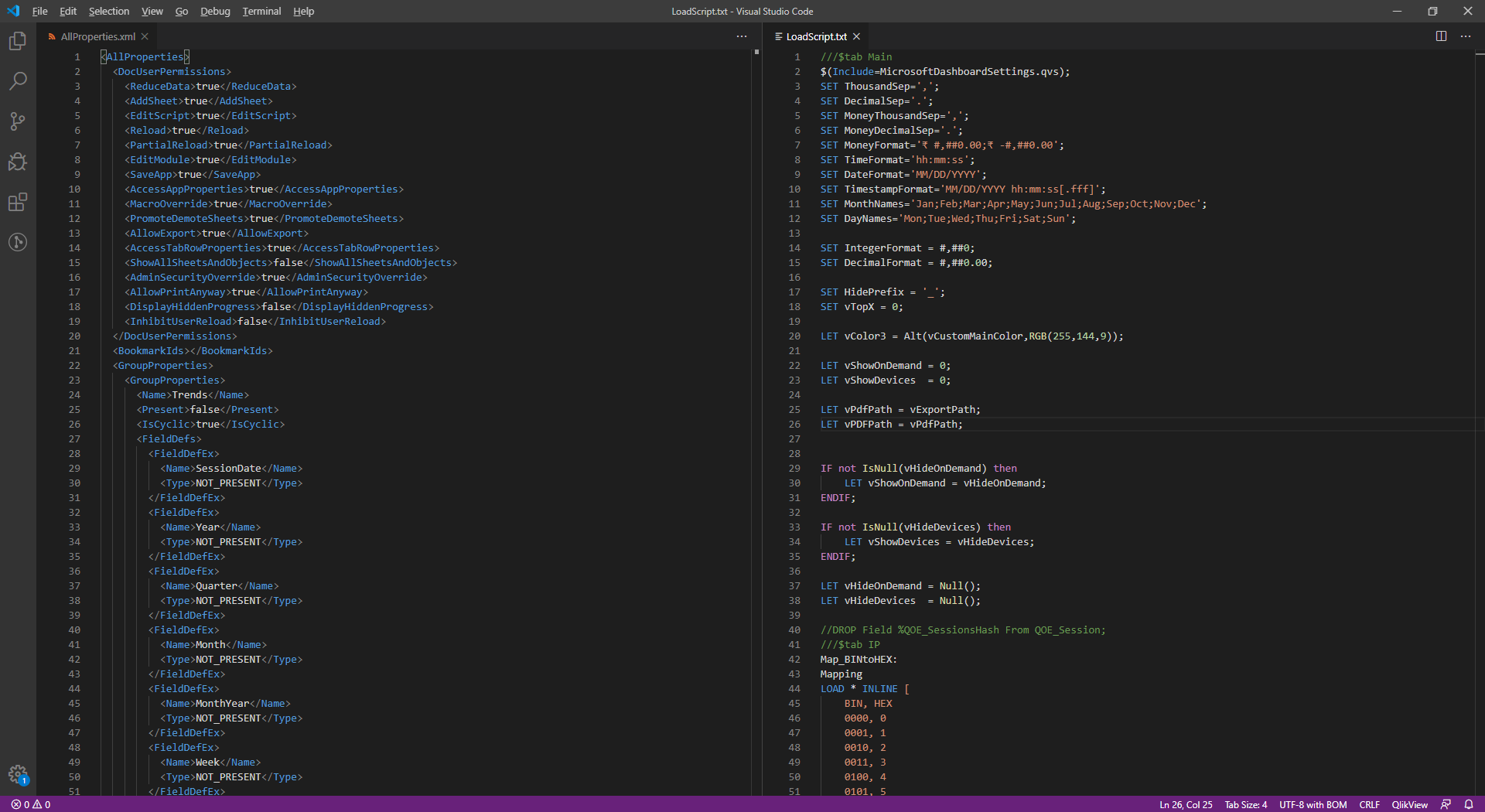

QOps focuses primarily on interaction with the Qlik API and on CI/CD. It makes all the commands needed to work with Qlik applications available in the console and preserves the integrity of applications when additional code is used in variables or extensions.

Comparable tools work only in the browser. They also currently support neither CI/CD across Git-based version control hosting systems nor direct user interaction with the source code.

- Is it possible to use QOps to protect code in Qlik applications?

Yes, it’s possible. QOps allows users to save and manage changes to source code.

- Is it possible to migrate source code of a QlikView application to Qlik Sense using QOps?

No. However, QOps can manage the source code of both QlikView and Qlik Sense applications.

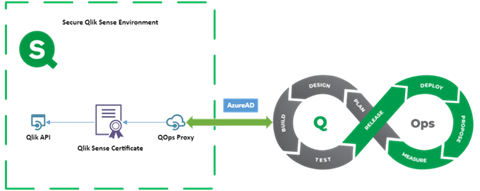

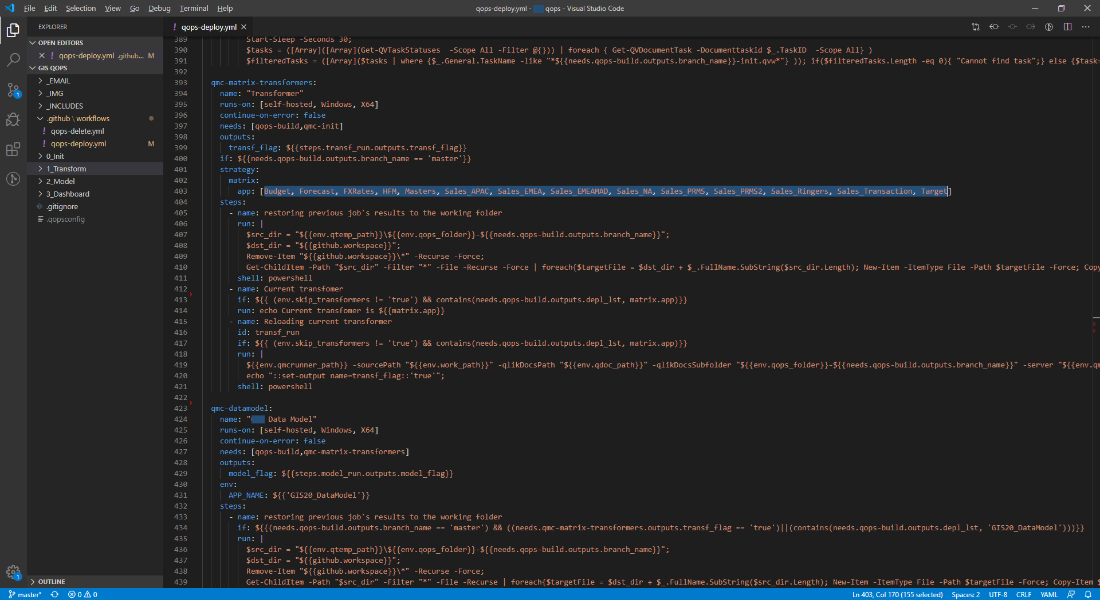

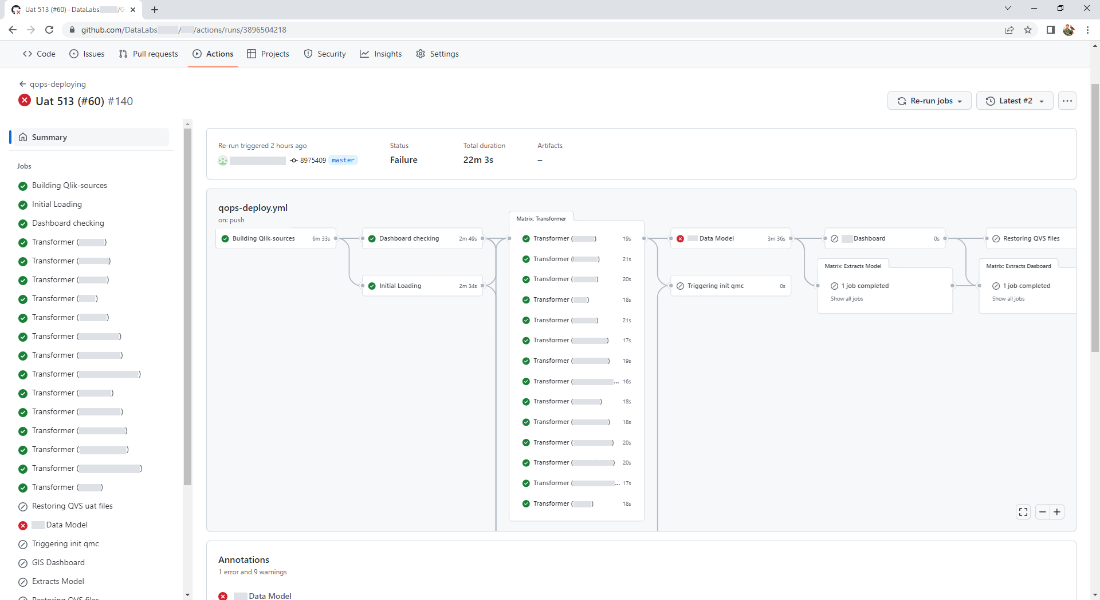

- What is the QOps architecture, and what is required for installation?

QOps is installed using an installer. We provide step-by-step instructions for installing and using the product. You can find the documentation at qops.datalabsua.com.

If you encounter difficulties during the installation process, you can contact QOps support for a prompt solution to the problem.

- Is there a trial period for using QOps?

Email us at [email protected] or fill out the form at qops.datalabsua.com.

- What are the model and conditions for purchasing QOps?

QOps is sold in packs of 10 licenses. A CI/CD implementation requires an additional license for each runner on which QOps will be used. A Qlik license is also required. For detailed information, fill out the form on the QOps website.

Each client is special and has unique needs. If you have any questions, we will be happy to answer them: